Review: The Last Battle, by C.S. Lewis

| Field | Value |
|---|---|
| Illustrator | Pauline Baynes |
| Series | Chronicles of Narnia #7 |
| Publisher | Collier Books |
| Copyright | 1956 |
| Printing | 1978 |
| ISBN | 0-02-044210-6 |
| Format | Mass market |
| Pages | 184 |

The Last Battle is the seventh and final book of the Chronicles of Narnia in every reading order. It ties together (and spoils) every previous Narnia book, so you do indeed want to read it last (or skip it entirely, but I'll get into that).

In the far west of Narnia, beyond the Lantern Waste and near the great waterfall that marks Narnia's western boundary, live a talking ape named Shift and a talking donkey named Puzzle. Shift is a narcissistic asshole who has been gaslighting and manipulating Puzzle for years, convincing the poor donkey that he's stupid and useless for anything other than being Shift's servant. At the start of the book, a lion skin washes over the waterfall and into the Cauldron Pool. Shift, seeing a great opportunity, convinces Puzzle to retrieve it.

The king of Narnia at this time is Tirian. I would tell you more about Tirian, but despite his being the protagonist, that's about all the characterization he gets. He's the king, he's broad-shouldered and strong, he behaves in a correct kingly fashion by preferring hunting lodges and simple camps to the capital at Cair Paravel, and his close companion is a unicorn named Jewel. Other than that, he's another character like Rilian from The Silver Chair who feels like he was taken from a medieval Arthurian story. (Thankfully, unlike Rilian, he doesn't talk like he's in a medieval Arthurian story.)

Tirian finds out about Shift's scheme when a dryad appears at Tirian's camp, calling for justice for the trees of Lantern Waste who are being felled. Tirian rushes to investigate and stop this monstrous act, only to find the beasts of Narnia cutting down trees and hauling them away for Calormene overseers. When challenged on why they would do such a thing, they reply that it's at Aslan's orders.

The Last Battle is largely the reason why I decided to do this re-read and review series. It is, let me be clear, a bad book. The plot is absurd, insulting to the characters, and in places actively offensive. It is also, unlike the rest of the Narnia series, dark and depressing for nearly all of the book. The theology suffers from problems faced by modern literature that tries to use the Book of Revelation and related Christian mythology as a basis. And it is, most famously, the site of one of the most notorious authorial betrayals of a character in fiction.

And yet, The Last Battle, probably more than any other single book, taught me to be a better human being. It contains two very specific pieces of theology that I would now critique in multiple ways but which were exactly the pieces of theology that I needed to hear when I first understood them. This book steered me away from a closed, judgmental, and condemnatory mindset at exactly the age when I needed something to do that. For that, I will always have a warm spot in my heart for it.

I'm going to start with the bad parts, though, because that's how the book starts.

MAJOR SPOILERS BELOW.

First, and most seriously, this is a second-order idiot plot. Shift shows up with a donkey wearing a lion skin (badly), only lets anyone see him via firelight, claims he's Aslan, and starts ordering the talking animals of Narnia to completely betray their laws and moral principles and reverse every long-standing political position of the country... and everyone just nods and goes along with this. This is the most blatant example of a long-standing problem in this series: Lewis does not respect his animal characters. They are the best feature of his world, and he treats them as barely more intelligent than their non-speaking equivalents and in need of humans to tell them what to do.

Furthermore, despite the assertion of the narrator, Shift is not even close to clever. His deception has all the subtlety of a five-year-old who doesn't want to go to bed, and he offers the Narnians absolutely nothing in exchange for betraying their principles. I can forgive Puzzle for going along with the scheme since Puzzle has been so emotionally abused that he doesn't know what else to do, but no one else has any excuse, especially Shift's neighbors. Given his behavior in the book, everyone within a ten-mile radius would be so sick of his whining, bullying, and lying within a month that they'd never believe anything he said again. Rishda and Ginger, a Calormene captain and a sociopathic cat who later take over Shift's scheme, do qualify as clever, but there's no realistic way Shift's plot would have gotten far enough for them to get involved.

The things that Shift gets the Narnians to do are awful. This is by far the most depressing book in the series, even more than the worst parts of The Silver Chair. I'm sure I'm not the only one who struggled to read through the first part of this book and raced through it on re-reads because everything is so hard to watch. The destruction is wanton and purposeless, and the frequent warnings from both characters and narration that these are the last days of Narnia add to the despair. Lewis takes all the beautiful things that he built over six books and smashes them before your eyes. It's a lot to take, given that previous books would have treated the felling of a single tree as an unspeakable catastrophe.

I think some of these problems are due to the difficulty of using Christian eschatology in a modern novel. An antichrist is obligatory, but the animals of Narnia have no reason to follow an antichrist given their direct experience with Aslan, particularly not the aloof one that Shift tries to give them. Lewis forces the plot by making everyone act stupidly and out of character. Similarly, Christian eschatology says everything must become as awful as possible right before the return of Christ, hence the difficult-to-read sections of Narnia's destruction, but there's no in-book reason for the Narnians' complicity in that destruction. One can argue about whether this is good theology, but it's certainly bad storytelling.

I can see the outlines of the moral points Lewis is trying to make about greed and rapacity, abuse of the natural world, dubious alliances, cynicism, and ill-chosen prophets, but because there is no explicable reason for Tirian's quiet kingdom to suddenly turn to murderous resource exploitation, none of those moral points land with any force. The best moral apocalypse shows the reader how, were they living through it, they would be complicit in the devastation as well. Lewis does none of that work, so the reader is just left angry and confused.

The book also has several smaller poor authorial choices, such as the blackface incident. Tirian, Jill, and Eustace need to infiltrate Shift's camp and use blackface to disguise themselves as Calormenes. That alone uncomfortably reveals how much skin tone determines nationality in this world, but Lewis makes it far worse by having Tirian comment that he "feel[s] a true man again" after removing the blackface and switching to Narnian clothes.

All of this drags on and on, unlike Lewis's normally tighter pacing, to the point that I remembered this book being twice the length of any other Narnia book. It's not; it's about the same length as the rest, but it's such a grind that it feels interminable. The sum total of the bright points of the first two-thirds of the book is the arrival of Jill and Eustace, Jill's one moment of true heroism, and the loyalty of a single Dwarf. The rest is all horror and betrayal and doomed battles and abject stupidity.

I do, though, have to describe Jill's moment of glory, since I complained about her and Eustace throughout The Silver Chair. Eustace is still useless, but Jill learned forestcraft during her previous adventures (not that we saw much sign of this previously) and slips through the forest like a ghost to steal Puzzle and his lion costume out from under the noses of the villains. Even better, she finds Puzzle and the lion costume hilarious, which is the one moment in the book where one of the characters seems to understand how absurd and ridiculous this all is. I loved Jill so much in that moment that it makes up for all of the pointless bickering of The Silver Chair. She doesn't get to do much else in this book, but I wish the Jill who shows up in The Last Battle had gotten her own book.

The end of this book, and the only reason why it's worth reading, happens once the heroes are forced into the stable that Shift and his co-conspirators have been using as the stage for their fake Aslan. Its door (for no well-explained reason) has become a door to Aslan's Country and leads to a reunion with all the protagonists of the series. It also becomes the frame of Aslan's final destruction of Narnia and judging of its inhabitants, which I suspect would be confusing if you didn't already know something about Christian eschatology. But before that, this happens, which is sufficiently and deservedly notorious that I think it needs to be quoted in full.

> "Sir," said Tirian, when he had greeted all these. "If I have read the chronicle aright, there should be another. Has not your Majesty two sisters? Where is Queen Susan?"
>
> "My sister Susan," answered Peter shortly and gravely, "is no longer a friend of Narnia."
>
> "Yes," said Eustace, "and whenever you've tried to get her to come and talk about Narnia or do anything about Narnia, she says 'What wonderful memories you have! Fancy your still thinking about all those funny games we used to play when we were children.'"
>
> "Oh Susan!" said Jill. "She's interested in nothing nowadays except nylons and lipstick and invitations. She always was a jolly sight too keen on being grown-up."
>
> "Grown-up indeed," said the Lady Polly. "I wish she would grow up. She wasted all her school time wanting to be the age she is now, and she'll waste all the rest of her life trying to stay that age. Her whole idea is to race on to the silliest time of one's life as quick as she can and then stop there as long as she can."

There are so many obvious and dire problems with this passage, and so many others have written about it at length, that I will only add a few points.

First, I find it interesting that neither Lucy nor Edmund says a thing. (I would like to think that Edmund knows better.) The real criticism comes from three characters who never interacted with Susan in the series: the two characters introduced after she was no longer allowed to return to Narnia, and a character from the story that predated hers. (And Eustace certainly has some gall to criticize someone else for treating Narnia as a childish game.)

It also doesn't say anything good about Lewis that he puts his rather sexist attack on Susan into the mouths of two other female characters. Polly's criticism is a somewhat generic attack on puberty that could arguably apply to either sex (although "silliness" is usually reserved for women), but Jill makes the attack explicitly gendered. It's the attack of a girl who wants to be one of the boys on a girl who embraces things that are coded feminine, and there's a whole lot of politics around the construction of gender happening here that Lewis is blindly reinforcing and not grappling with at all.

Plus, this is only barely supported by single sentences in The Voyage of the Dawn Treader and The Horse and His Boy and directly contradicts the earlier books. We're expected to believe that Susan the archer, the best swimmer, the most sensible and thoughtful of the four kids has abruptly changed her whole personality. Lewis could have made me believe Susan had soured on Narnia after the attempted kidnapping (and, although left unstated, presumably eventual attempted rape) in The Horse and His Boy, if one ignores the fact that the incident supposedly happens before Prince Caspian, where there is no sign of such a reaction. But not for those reasons, and not in that way.

Thankfully, after this, the book gets better, starting with the Dwarfs, whose story is one of the two passages that had a profound influence on me. Except for one Dwarf who allied with Tirian, the Dwarfs reacted to the exposure of Shift's lies by disbelieving both Tirian and Shift, calling a pox on both their houses, and deciding to make their own side. During the last fight in front of the stable, they started killing whichever side looked like it was winning. (Although this is horrific in the story, I think this is accurate social commentary on a certain type of cynicism, even if I suspect Lewis may have been aiming it at atheists.) Eventually, they're thrown through the stable door by the Calormenes. However, rather than seeing the land of beauty and plenty that everyone else sees, they are firmly convinced they're in a dark, musty stable surrounded by refuse and dirty straw.

This is, quite explicitly, not something imposed on them. Lucy rebukes Eustace for wishing Tash had killed them, and tries to make friends with them. Aslan tries to show them how wrong their perceptions are, to no avail. Their unwillingness to admit they were wrong is so strong that they make themselves believe that everything is worse than it actually is.

> "You see," said Aslan. "They will not let us help them. They have chosen cunning instead of belief. Their prison is only in their own minds, yet they are in that prison; and so afraid of being taken in that they cannot be taken out."

I grew up with the US evangelical version of Hell as a place of eternal torment, which in turn was used to justify religious atrocities in the name of saving people from Hell. But there is no Hell of that type in this book. There is a shadow into which many evil characters simply disappear, and there's this passage. Reading this was the first time I understood the alternative idea of Hell as the absence of God instead of active divine punishment. Lewis doesn't use the word "Hell," but it's obvious from context that the Dwarfs are in Hell. But it's not something Aslan does to them and no one wants them there; they could leave any time they wanted, but they're too unwilling to be wrong.

You may have to be raised in conservative Christianity to understand how profoundly this rethinking of Hell (which Lewis tackles at greater length in The Great Divorce) undermines the system of guilt and fear that's used as motivation and control. It took me several re-readings and a lot of thinking about this passage, but this is where I stopped believing in a vengeful God who will eternally torture nonbelievers, and thus stopped believing in all of the other theology that goes with it.

The second passage that changed me is Emeth's story. Emeth is a devout Calormene, a follower of Tash, who volunteered to enter the stable when Shift and his co-conspirators were claiming Aslan/Tash was inside. Some time after going through, he encounters Aslan, and this is part of his telling of that story (and yes, Lewis still has Calormenes telling stories as if they were British translators of the Arabian Nights):

> [...] Lord, is it then true, as the Ape said, that thou and Tash are one? The Lion growled so that the earth shook (but his wrath was not against me) and said, It is false. Not because he and I are one, but because we are opposites, I take to me the services which thou hast done to him. For I and he are of such different kinds that no service which is vile can be done to me, and none which is not vile can be done to him. Therefore if any man swear by Tash and keep his oath for the oath's sake, it is by me that he has truly sworn, though he know it not, and it is I who reward him. And if any man do a cruelty in my name, then, though he says the name Aslan, it is Tash whom he serves and by Tash his deed is accepted. Dost thou understand, Child? I said, Lord, thou knowest how much I understand. But I said also (for the truth constrained me), Yet I have been seeking Tash all my days. Beloved, said the Glorious One, unless thy desire had been for me, thou wouldst not have sought so long and so truly. For all find what they truly seek.

So, first, don't ever say this to anyone. It's horribly condescending and, since it's normally said by white Christians to other people, usually explicitly colonialist. Telling someone that their god is evil but since they seem to be a good person they're truly worshiping your god is only barely better than saying yours is the only true religion.

But it is better, and for someone who, at the time, was wholly steeped in the belief that only Christians were saved and every follower of another religion was following Satan and was damned to Hell, this passage blew my mind. This was the first place I encountered the idea that someone who followed a different religion could be saved, or that God could transcend religion, and it came with exactly the context and justification that I needed given how close-minded I was at the time.

Today, I would say that the Christian side of this analysis needs far more humility, and fobbing off all the evil done in the name of the Christian God by saying "oh, those people were really following Satan" is a total moral cop-out. But, nonetheless, Lewis opened a door for me that I was able to step through and move beyond to a less judgmental, dismissive, and hostile view of others.

There's not much else in the book after this. It's mostly Lewis's charmingly Platonic view of the afterlife, in which the characters go inward and upward to truer and more complete versions of both Narnia and England and are reunited (very briefly) with every character of the series. Lewis knows not to try too hard to describe the indescribable, but it remains one of my favorite visions of an afterlife because it makes so explicit that this world is neither static nor the last, but only the beginning of a new adventure.

This final section of The Last Battle is deeply flawed, rather arrogant, a little bizarre, and involves more lectures on theology than precise description, but I still love it. By itself, it's not a bad ending for the series, although I don't think it has half the beauty or wonder of the end of The Voyage of the Dawn Treader. It's a shame about the rest of the book, and it's a worse shame that Lewis chose to sacrifice Susan on the altar of his prejudices. Those problems made it very hard to read this book again and make it impossible to recommend. Thankfully, you can read the series without it, and perhaps most readers would be better off imagining their own ending (or lack of ending) to Narnia than the one Lewis chose to give it.

But the one redeeming quality The Last Battle will always have for me is that, despite all of its flaws, it was exactly the book that I needed to read when I read it.

Rating: 4 out of 10

As I mentioned last time, bearer tokens are not super compatible with a model in which every access is verified to ensure it's coming from a trusted device. Let's talk about that in a bit more detail.

Sony RX100-III, relegated to a webcam

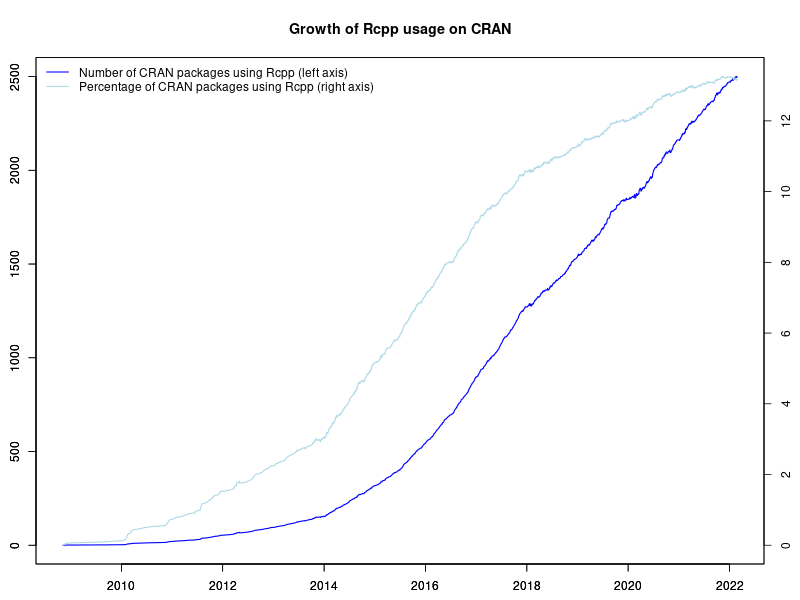

As many of you may be aware, I work with

As of this morning,

There is a saying that you can take the boy out of the valley, but you cannot take the valley out of the boy. So for those of us who spent a decade or two in finance and on trading floors, having some market price information available becomes second nature. And/or sometimes it is just good fun to program this.

A good while back

This is quite mesmerizing when you just run two command-line clients (in a

This has ended up longer than I expected. I'll write up posts about some of the individual steps with some more details at some point, but this is an overview of the yak shaving I engaged in. The TL;DR is:

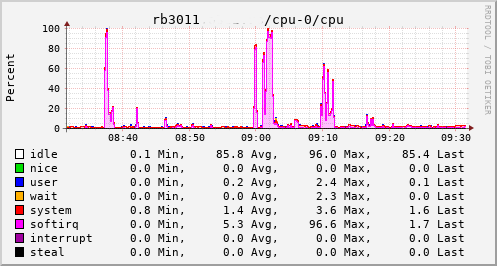

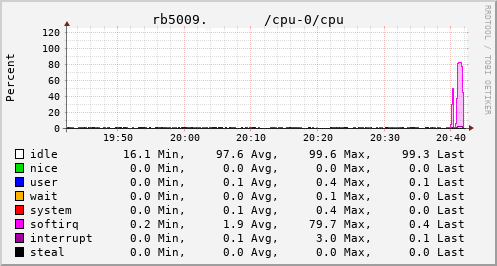

This provided an opportunity to see just what the RB3011 could actually manage. In the configuration I had, it turned out to be not much more than the 80Mb/s speeds I had previously seen. The upload jumped from a solid 20Mb/s to 75Mb/s, so I knew the regrade had actually happened. Looking at CPU utilisation clearly showed the problem: softirqs were using almost 100% of a CPU core.

Now, the way the hardware is set up on the RB3011 is that there are two separate 5-port switches, each connected back to the CPU via a separate GigE interface. For various reasons I had everything on a single switch, which meant that all traffic was boomeranging in and out of the same CPU interface. The IPQ8064 has dual cores, so I thought I'd try moving the external connection to the other switch. That puts it on its own GigE CPU interface, which then allows binding the interrupts to a different CPU core. That helps; throughput to the outside world hits 140Mb/s+. Still a long way from the expected max, but proof we just need more grunt.
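On Linux, binding an interface's interrupts to a particular core is usually done by writing a hexadecimal CPU bitmask to `/proc/irq/<N>/smp_affinity`. The post doesn't say exactly how the binding was done, so this is only a minimal sketch under that assumption: it computes the mask and prints the command one might run as root. The IRQ number `42` and the helper names `affinity_mask` and `affinity_path` are hypothetical; the real IRQ for the second GigE CPU interface would have to be looked up in `/proc/interrupts`.

```python
# Minimal sketch: compute the smp_affinity bitmask that pins an IRQ to chosen CPU cores.
# Assumptions: the standard Linux /proc/irq/<N>/smp_affinity interface; IRQ 42 is a
# hypothetical placeholder, not a value taken from the RB3011.


def affinity_mask(*cores: int) -> str:
    """Return the hex bitmask (as written to smp_affinity) selecting the given cores."""
    if not cores:
        raise ValueError("at least one core is required")
    mask = 0
    for core in cores:
        if core < 0:
            raise ValueError(f"invalid core number: {core}")
        mask |= 1 << core  # bit N set means CPU N may service the interrupt
    return format(mask, "x")


def affinity_path(irq: int) -> str:
    """Path of the procfs file controlling which CPUs may handle this IRQ."""
    return f"/proc/irq/{irq}/smp_affinity"


if __name__ == "__main__":
    HYPOTHETICAL_IRQ = 42  # placeholder; look up the real IRQ in /proc/interrupts
    # The IPQ8064 is dual-core (CPU 0 and CPU 1); steer the external interface to CPU 1.
    mask = affinity_mask(1)
    # Print rather than write, so the sketch is safe to run without root.
    print(f"echo {mask} > {affinity_path(HYPOTHETICAL_IRQ)}")
```

Run as-is, it prints `echo 2 > /proc/irq/42/smp_affinity`, since bit 1 selects CPU 1. Printing instead of writing keeps it harmless to try; note that a running `irqbalance` daemon may later overwrite a manually set mask.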

Which brings us to this past weekend, when, having worked out all the other bits, I tried the squashfs root image again on the RB3011. Success! The home automation bits connected to it, the link to the outside world came up, everything seemed happy. So I double-checked my bootloader bits on the RB5009, brought it down to the comms room and plugged it in instead. And, modulo my failing to update the nftables config to allow it to do forwarding, it all came up ok. Some testing with iperf3 internally got a nice 912Mb/s sustained between subnets, and some less scientific testing with wget + speedtest-cli saw speeds of over 460Mb/s to the outside world.

Time from ordering the router until it was in service? Just under 8 weeks.

Should online communities require people to create accounts before participating?

For decades, this question has been a source of disagreement among people who start or manage online communities. Requiring accounts makes some sense since users contributing without accounts are a common source of vandalism, harassment, and low-quality content. In theory, creating an account can deter these kinds of attacks while still making it pretty quick and easy for newcomers to join. Also, an account requirement seems unlikely to affect contributors who already have accounts and are typically the source of most valuable contributions. Creating accounts might even help community members build deeper relationships and commitments to the group in ways that lead them to stick around longer and contribute more.

github activity october 2013 to october 2014

github activity october 2014 to october 2015

github activity october 2015 to october 2016

github activity october 2016 to october 2017

github activity october 2017 to october 2018

github activity october 2018 to october 2019

github activity october 2019 to october 2020

github activity october 2020 to october 2021

We also don't know if this reversal was human or algorithmic, but that still is beside the point.

The point is, Facebook intentionally chooses to surface and promote those things that drive engagement, regardless of quality.

Clearly many have wondered if tens of thousands of people have died unnecessary deaths over COVID as a result. One whistleblower says

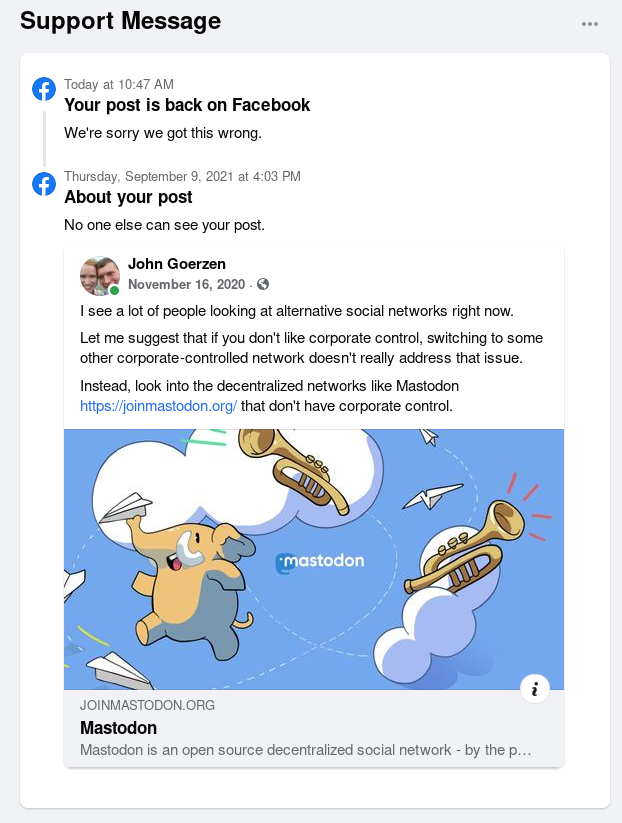

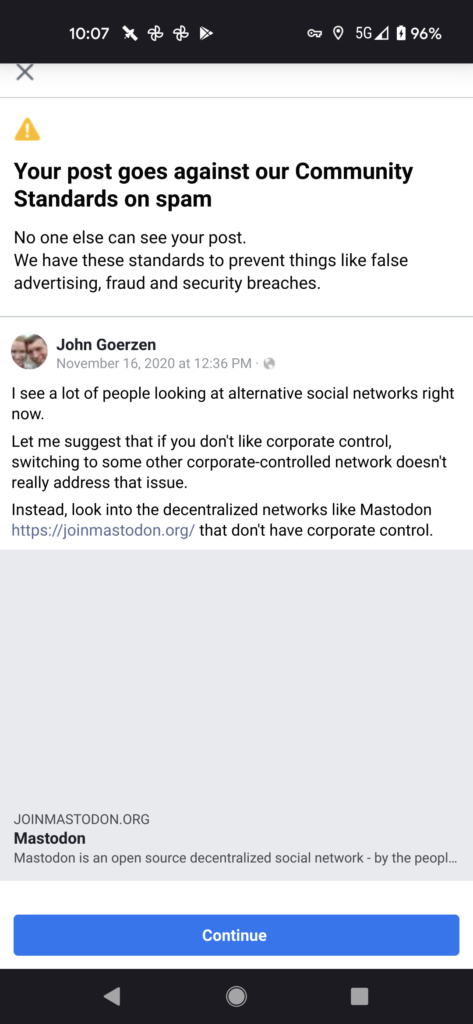

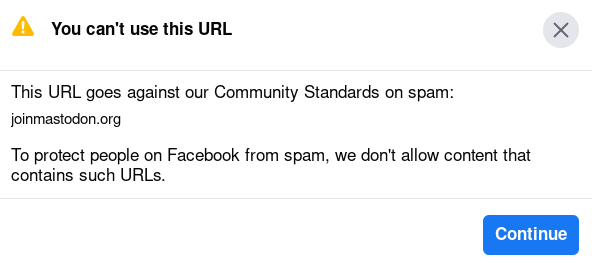

Wonder with me for a second what this one-off post I composed myself might have done to trip Facebook's filter. It is probably obvious that what tripped the filter was the mention of an open source competitor, even though Facebook is far larger than Mastodon. I have been a member of Facebook for many years, and this is the one and only time anything like that has happened.

Why they decided today to take down that post I have no idea.

In case you wondered about their sincerity towards stamping out misinformation (which, on the rare occasions they do something about it, they deprioritize rather than remove, as they did here), this probably answers your question. Or are they sincere about thinking they're such a force for good by connecting the world's people? Well, only so long as the world's people don't say nice things about alternatives to Facebook, I guess.

Well, you might be wondering, "Why not appeal, since they obviously made a mistake?" Because, of course, you can't:

Indeed I did tick a box that said I disagreed, but there was no place to ask why or to question their action.

So what would cause a non-controversial post from a long-time Facebook member, who has never had anything like this happen, to disappear?

Greed. Also fear.

Maybe I'd feel sorry for them if they weren't acting like a bully.

Edit: There are reports from several others on Mastodon of the same happening this week. I am trying to gather more information. It sounds like it may be happening on Twitter as well.

Edit 2: And here are

Anyone else seeing it?

Edit 4: It is far more than just me, clearly. More reports are out there; for instance,

This is not only the Year of the Ox, but also the year of Debian 11, code-named bullseye. The release lies ahead,

I had been meaning to write on the above topic for almost a couple of months now but just kept procrastinating about it. Push came to shove when Sucheta Dalal and Debasis Basu shared their understanding, wisdom, and all in the new book called

The psyche of the Bengali and the Bhadralok has gone through enormous shifts. I have met quite a few and do see the guilt they feel. If one wonders as to how socialist parties are able to hold power in Bengal, look no further than

Over the past days, I have received tons of positive feedback on my previous blog post about forming the Debian BBB Packaging Team [1]. Feedback arrived via mail, IRC, [matrix] and Mastodon. Awesome. Thanks for sharing your thoughts, folks...

Therefore, here comes a short ...

Heads-Up on the current Ongoings

... around packaging BigBlueButton for Debian:
